Newsletter

After buying Meta’s Ray-Ban smart glasses, Pugpig’s Steve Farrugia reviews current AR technology and considers the future of mobile media consumption.

29th November 2024

In a recent edition of the Media Bulletin, we mentioned that Team Pugpig used our away days to discuss the future of media and where technology may be heading in the next few years.

The mobile revolution began with simple phones that connected us via voice and text messaging anywhere and anytime. Smartphones have since brought together communications, media, still and video cameras, and internet connectivity in increasingly sophisticated devices. With these devices, we can bank and make payments, have video calls with friends, family, colleagues and customers, and access huge libraries of music and movies anywhere.

We thought about which technologies will have the highest impact on media consumption in the future. Steve Farrugia, Pugpig’s Head of Customer Support, had recently bought Meta’s Ray-Ban smart sunglasses. Meta is developing this technology rapidly, and Steve has seen new features added to his smart glasses since he bought them.

For this special edition of the Media Bulletin, he looks at how they fit into his life now and what they might mean for society and media in the coming years.

By Steve Farrugia, Pugpig Head of Customer Support

Nearly three years ago, I became a father. The thing about babies is that they require at least one arm to hold them when they’re asleep and at least two when they’re not. For me, that meant going from reading a newspaper or a paperback to using apps on my iPhone, ebooks on Amazon Kindle and a massive uptick in podcast consumption on Spotify to find out about the world outside our flat.

Fast forward to having a toddler who is constantly displaying new skills or looking cute, and you’re having to shell out for more iCloud storage due to the abundance of memories stored on your phone. While on a beach holiday early this year, I remember thinking how great it would be to live in the moment and still record these memories for posterity, like a Black Mirror episode you might have seen on Netflix.

Not long after, I saw an advert on Instagram for a pair of Ray-Bans with a camera made by Meta.

Naturally, I was intrigued. Sunglasses with a camera feel like a gadget straight out of a sci-fi movie, but the practicality of capturing moments without fumbling for my phone was what sold me. Plus, with a six-week summer trip on the horizon, it seemed the perfect time to put them to the test.

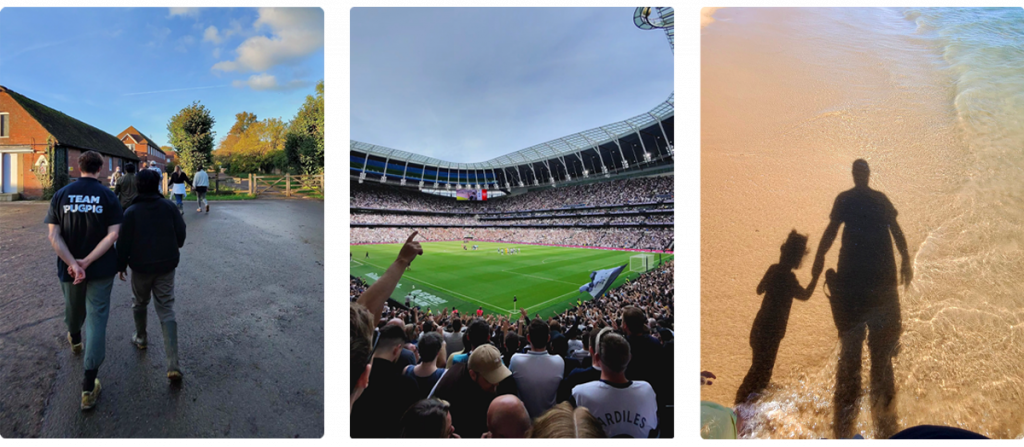

Having bought them primarily for those fleeting, silly moments with my toddler that are gone as quickly as they come, I soon realised the world is full of moments that benefit from the opportunity the Meta Ray-Bans present. I took them for a spin at the football – pressing a button when it looked like something exciting might happen, and catching the celebration afterwards.

What surprised me was how quickly they became more than just a camera. They mostly replaced my AirPods over the summer. They’re great for running, as they’re not noticeably heavier than a standard pair of sunglasses. I found many uses for them, whether a POV shot for social media, a nice landscape, or simply being able to still hear the world around me thanks to the open design of the speakers. I found myself using them while chilling by the pool or sitting at a café, and the glasses became my go-to for listening to podcasts and taking calls.

My only regret is not taking the plunge on transition lenses, so they’d be just as useful in winter or while working at my desk. These, plus the option for prescription lenses, push the price up, but I’d expect a healthy ROI.

Meta is developing the glasses rapidly. Software updates over the last few months have added a basic voice assistant with Meta AI functionality and promise to use visual cues from the camera. Having an AI voice assistant fixed to your face day to day could change how people work, in the same way that ChatGPT has become a core tool for many.

Does having a camera and five microphones on my face throughout my day bother me? Not particularly with the current state of the art, as I know the limitations of battery tech mean that the camera isn’t on constantly. Others may be concerned that people are walking around with stealthy recording devices, because it’s quite difficult to tell these from standard Ray-Bans, even with the LED status light. But is it any different from using a phone camera?

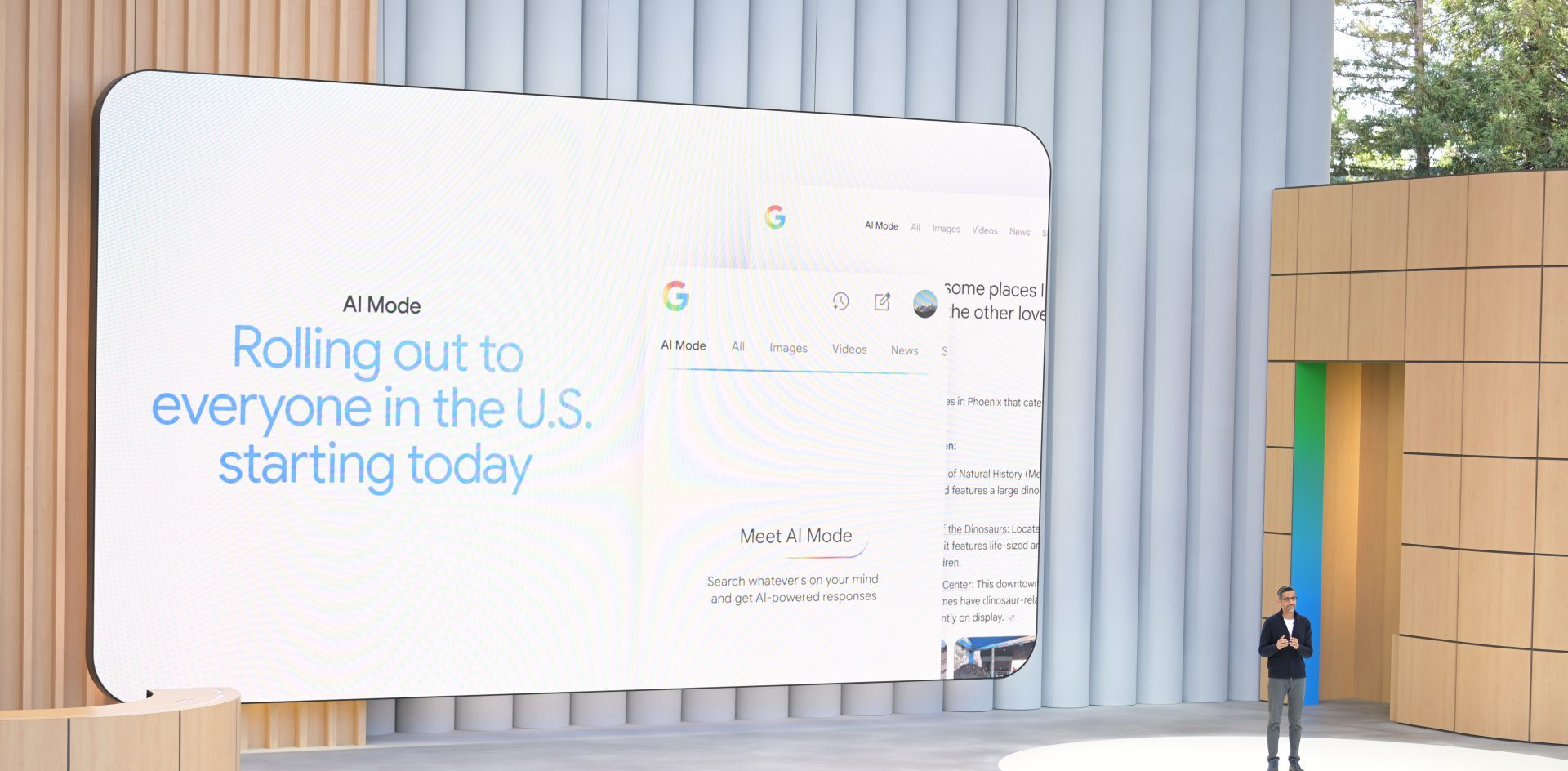

Still, wearing these sunglasses has made me think a lot about where wearable technology is heading. Around the same time I got them, Apple launched the Vision Pro, and Meta recently teased its Orion prototype. In five years, Meta has developed Orion from a system that required a bulky backpack to a pair of glasses and a processing unit not much wider than the glasses themselves. Meta’s vision for Orion is a mixed- or extended-reality platform that overlays a computer interface on the real world. The system can already run Meta’s AI-powered smart assistant, and there is already a demo where a person looks at some ingredients and Meta AI comes up with a recipe that uses them.

Imagine pulling up match stats at the football, seeing IMDb details while watching a film, or even reading a newspaper on the lens while having a coffee. Think of travelling across London and getting real-time news updates, tailored to your interests and location.

The built-in voice assistant has been another unexpected win. It feels a little futuristic – like “Dot and Bubble”, the Doctor Who episode where young adults live through a social media interface projected around their heads – but it’s not hard to imagine. With generative AI advancing so quickly, why would we need to pull out a phone to Google something or chat with ChatGPT? Why not just ask and get the answer directly through your glasses?

And the possibilities go far beyond convenience. What could this technology mean for people with visual or hearing impairments? Could augmented reality enhance accessibility, or even transform how we consume media entirely?

Some apps on the Pugpig Bolt platform let users listen to audio versions of entire editions of newspapers or magazines. It’s an interesting twist on the morning paper – a little different from a podcast, but it works. Why wouldn’t I listen to a newspaper over breakfast if I can’t sit down to read it, especially with a second child on the way? In time, is there going to be an article within the lens of my glasses overlaying the real-life image of my children watching Bluey?

Currently, Pugpig apps are available on the Apple, Google Play and Amazon stores to run on mobile devices, but will we need to start adding support for the likes of the Meta Ray-Bans and Vision Pro? What does that look like? What is the UX of Pugpig Bolt without a screen? These are the questions we’ve started asking.

But whether the future of media is audio-first or some mix of AR and AI, one thing’s certain: the tech is only just getting started, and it’s exciting – if a tad scary – to imagine where it could go next.

Here are some of the most important headlines about the business of news and publishing as well as strategies and tactics in product management, analytics and audience engagement.
